Overview

We systematically evaluate white-box adversarial attacks on generic neural network image classifiers. In the white-box setting, the attacker has complete visibility into the model’s architecture, parameters, and training data. Our experiments cover the MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100 datasets, each classified by a Convolutional Neural Network (CNN), and identify the intrinsic vulnerabilities of CNNs to six attacks: the Fast Gradient Sign Method (FGSM), the Basic Iterative Method (BIM), the Jacobian-based Saliency Map Attack (JSMA), Carlini & Wagner (C&W), Projected Gradient Descent (PGD), and DeepFool.

Research Objectives

- Evaluate CNN robustness against advanced white-box adversarial attacks.

- Compare the impact of single-step vs iterative attacks.

- Analyze the correlation between image quality metrics and classification accuracy.

- Provide insights for developing robust CNN defense mechanisms.
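
The single-step versus iterative distinction in the objectives can be illustrated with a minimal sketch. It is not the study's implementation: it assumes a hypothetical toy logistic-regression classifier in place of a CNN, uses only the Python standard library, and all names (`fgsm`, `bim`, `w`, `b`, `eps`, `alpha`) are chosen here for illustration. FGSM takes one step of size `eps` along the sign of the input gradient, while BIM takes several smaller steps of size `alpha` and projects back into the `eps`-ball after each one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss_grad_x(w, b, x, y):
    """Gradient of the binary cross-entropy loss with respect to the input x."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(w, b, x, y, eps):
    """Single-step attack: one move of size eps along the gradient sign."""
    g = loss_grad_x(w, b, x, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def bim(w, b, x, y, eps, alpha, steps):
    """Iterative attack: repeated small steps, each projected back into
    the eps-ball around the clean input and the valid pixel range [0, 1]."""
    x_adv = list(x)
    for _ in range(steps):
        g = loss_grad_x(w, b, x_adv, y)
        x_adv = [
            clip(clip(xa + alpha * sign(gi), xi - eps, xi + eps), 0.0, 1.0)
            for xa, gi, xi in zip(x_adv, g, x)
        ]
    return x_adv

# Hypothetical toy model and input, chosen only for illustration.
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.6, 0.2, 0.5], 1
eps = 0.3

x_fgsm = fgsm(w, b, x, y, eps)
x_bim = bim(w, b, x, y, eps, alpha=0.1, steps=5)

print("clean    p(y=1):", round(predict(w, b, x), 3))
print("FGSM     p(y=1):", round(predict(w, b, x_fgsm), 3))
print("BIM      p(y=1):", round(predict(w, b, x_bim), 3))
```

On a linear model like this one, the gradient sign never changes between iterations, so both attacks end at the same corner of the `eps`-ball. The two methods only diverge on non-linear models such as CNNs, where the gradient is recomputed at each intermediate point and can change direction.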

Methods

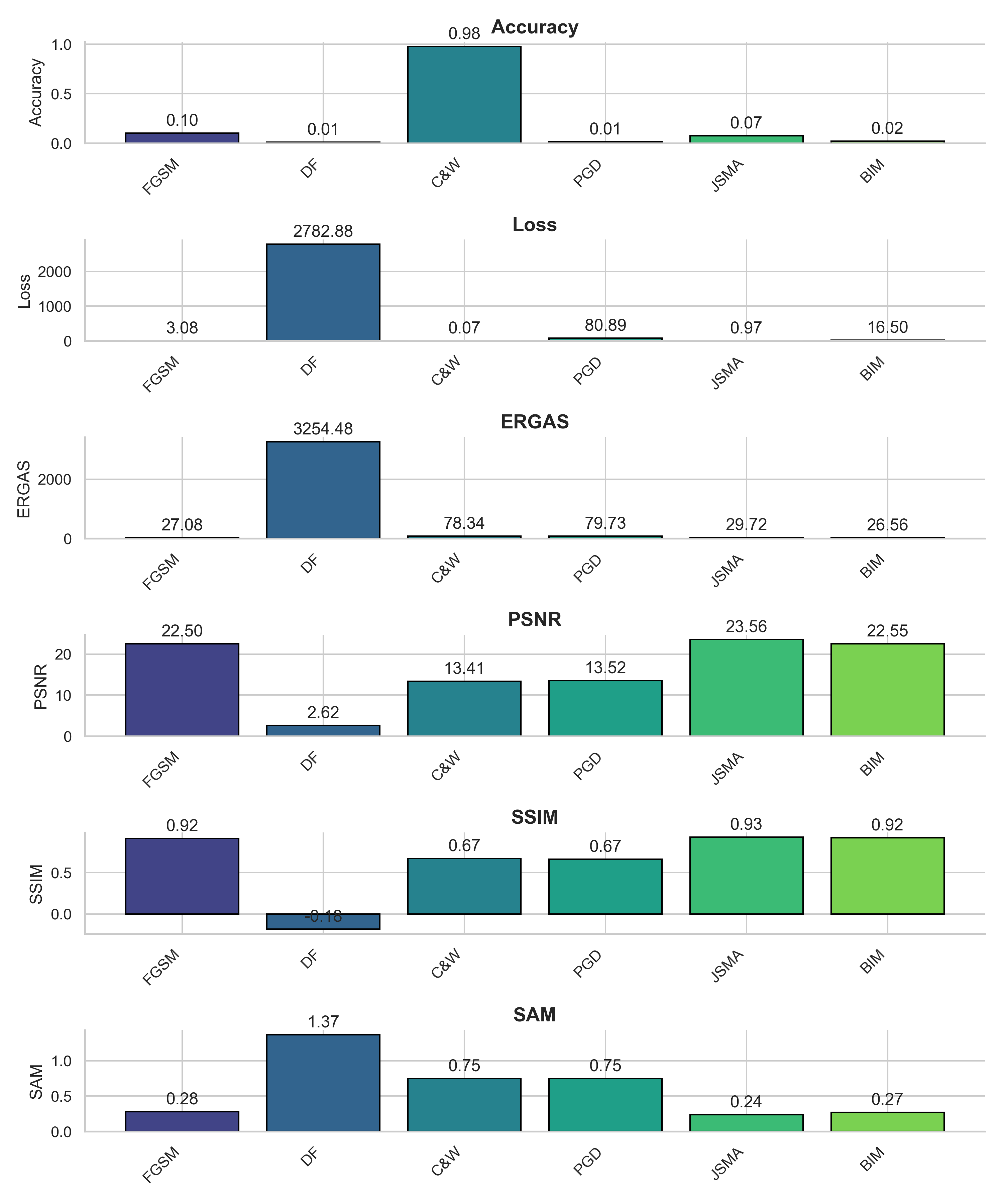

We measure how six attacks (FGSM, BIM, JSMA, C&W, PGD, and DeepFool) affect CNN performance metrics such as loss and accuracy. To provide a comprehensive comparison, we analyze four image datasets: MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100. We also explore how image quality metrics (ERGAS, PSNR, SSIM, SAM) correlate with classification performance.
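
As a rough sketch of the metric-correlation analysis, the snippet below computes PSNR between a clean and a perturbed image and provides a Pearson correlation helper. It is an illustration under stated assumptions rather than the study's pipeline: images are flat lists of pixel values in [0, 1], the function names `psnr` and `pearson` are chosen here, and the demo perturbation is a uniform shift rather than a real adversarial example.

```python
import math

def psnr(reference, distorted, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images,
    given as flat lists of pixel values in [0, max_value]."""
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((a - mean_x) * (c - mean_y) for a, c in zip(xs, ys))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in xs))
    std_y = math.sqrt(sum((c - mean_y) ** 2 for c in ys))
    return cov / (std_x * std_y)

# Hypothetical demo: a flat gray image shifted by growing perturbation budgets.
image = [0.5] * 16
budgets = [0.05, 0.1, 0.2]
psnr_values = [psnr(image, [p + e for p in image]) for e in budgets]

for e, value in zip(budgets, psnr_values):
    print(f"eps={e:.2f}  PSNR={value:.2f} dB")
print("correlation(eps, PSNR):", round(pearson(budgets, psnr_values), 3))
```

To relate image quality to classification performance, one would pass a list of per-attack (or per-budget) PSNR values and the matching list of accuracies to `pearson`. ERGAS, SSIM, and SAM need their own implementations; libraries such as scikit-image offer tested versions of PSNR and SSIM that are preferable to hand-written code for real experiments.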

Results & Visualizations

Impact

Our study highlights significant vulnerabilities in CNN models when subjected to adversarial attacks. It emphasizes the need for robust defense strategies to ensure reliable deployment of CNNs in real-world critical domains such as autonomous vehicles and healthcare diagnostics. We provide a foundation for future work on adaptive CNN defense mechanisms and explainability-driven adversarial robustness.